Compare: The Best LangSmith Alternatives & Competitors

Observability tools like LangSmith allow developers to monitor, analyze, and optimize AI model performance, which helps overcome the “black box” nature of LLMs.

However, LangSmith's self-hosted (on-premise) version is only available on its costly Enterprise plan, so many users are looking for more flexible alternatives. Which LangSmith alternative is the best in 2024? This comparison sheds some light.

Top LLM observability tools (updated July 2024)

- Helicone

- Phoenix by Arize

- Langfuse

- HoneyHive

- OpenLLMetry by Traceloop

LangSmith Competitors Overview

| Feature | LangSmith | Helicone | Phoenix by Arize AI | Langfuse | HoneyHive | OpenLLMetry by Traceloop |
|---|---|---|---|---|---|---|
| Open-source | - | ✅ | ✔ | ✔ | - | ✔ |
| Self-hosted | - | ✅ | - | ✔ | - | - |
| Prompt Templating | ✔ | ✅ | ✔ | ✔ | ✔ | ✔ |
| Agent Tracing | ✔ | ✅ | ✔ | ✔ | ✔ | ✔ |
| Experiments | ✔ | ✅ | ✔ | ✔ | ✔ | ✔ |
| Cost Analysis | ✔ | ✅ | ✔ | ✔ | ✔ | - |
| Evaluation | ✔ | - | ✔ | - | - | ✔ |
| User Tracking | ✔ | ✅ | - | ✔ | ✔ | ✔ |
| Feedback Tracking | ✔ | ✅ | - | ✔ | ✔ | ✔ |
| LangChain Integration | ✔ | ✅ | ✔ | ✔ | ✔ | ✔ |
| Flexible Pricing | - | ✅ | - | ✔ | ✔ | ✔ |
| Image support | - | ✅ | - | - | - | - |
| No payload limitations | - | ✅ | - | - | - | - |
| Dashboard | ✔ | ✅ | ✔ | ✔ | ✔ | ✔ |
| Data Export | ✔ | ✅ | ✔ | ✔ | ✔ | - |

1. Helicone

Designed for: developers & analysts

What is Helicone?

Helicone is an open-source LLM observability and monitoring platform purpose-built for developers to monitor, debug, and optimize their LLM applications. It can be self-hosted or used as a gateway with a simple 1-line integration, and it provides instant insights into latency, costs, time to first token (TTFT), and more.
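The "1-line integration" means pointing an existing OpenAI-compatible client at Helicone's gateway instead of at the provider directly. The sketch below only builds the client settings, so it runs without network access or keys. It assumes Helicone's OpenAI proxy URL `https://oai.helicone.ai/v1` and the `Helicone-Auth` header as described in Helicone's docs at the time of writing (verify both before use); the helper name `helicone_client_kwargs` is hypothetical.

```python
import os

# Assumed from Helicone's docs at the time of writing; check the current docs.
HELICONE_OPENAI_BASE_URL = "https://oai.helicone.ai/v1"


def helicone_client_kwargs(helicone_api_key: str) -> dict:
    """Build keyword arguments for an OpenAI-compatible client routed via Helicone.

    Hypothetical helper: returns the gateway base URL plus the header the
    gateway uses to attribute requests to your Helicone account.
    """
    if not helicone_api_key:
        raise ValueError("a Helicone API key is required")
    return {
        "base_url": HELICONE_OPENAI_BASE_URL,
        "default_headers": {"Helicone-Auth": f"Bearer {helicone_api_key}"},
    }


if __name__ == "__main__":
    # Falls back to a dummy key so the demo runs anywhere.
    key = os.environ.get("HELICONE_API_KEY", "demo-key")
    print(helicone_client_kwargs(key))
```

With the OpenAI Python SDK (v1 and later), `base_url` and `default_headers` are constructor arguments of the `OpenAI` client, so these settings could be passed as `OpenAI(api_key=..., **helicone_client_kwargs(key))` while the rest of the application stays unchanged.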

Top features

- Sessions - Group, track and visualize multi-step agent workflows and LLM interactions.

- Prompts & Experiments - Version and test prompts, then compare outputs before going to production.

- Custom properties - Segment data to understand your users better.
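Sessions and custom properties are attached per request. A minimal sketch, assuming the header names `Helicone-Session-Id`, `Helicone-Session-Path`, `Helicone-Session-Name`, `Helicone-User-Id`, and `Helicone-Property-<Name>` from Helicone's docs at the time of writing; the helper `helicone_request_headers` and the example values are hypothetical.

```python
import uuid
from typing import Dict, Optional


def helicone_request_headers(
    session_id: str,
    session_path: str,
    session_name: Optional[str] = None,
    user_id: Optional[str] = None,
    properties: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Build per-request Helicone headers (header names assumed, see lead-in).

    - session_id groups every call of one multi-step workflow together.
    - session_path places this call in the workflow tree, e.g. "/plan/search".
    - properties become Helicone-Property-<Name> headers for segmenting data.
    """
    headers = {
        "Helicone-Session-Id": session_id,
        "Helicone-Session-Path": session_path,
    }
    if session_name:
        headers["Helicone-Session-Name"] = session_name
    if user_id:
        headers["Helicone-User-Id"] = user_id
    for name, value in (properties or {}).items():
        headers[f"Helicone-Property-{name}"] = value
    return headers


if __name__ == "__main__":
    run_id = str(uuid.uuid4())  # one ID shared by every step of the workflow
    print(helicone_request_headers(run_id, "/plan", "trip-planner", user_id="user-123",
                                   properties={"Environment": "staging"}))
    print(helicone_request_headers(run_id, "/plan/search", "trip-planner"))
```

With the OpenAI Python SDK, per-request headers like these can be passed through the `extra_headers` argument of `client.chat.completions.create`, so each agent step reports its own path under the same session.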

How does Helicone compare to LangSmith?

| Feature | LangSmith | Helicone |
|---|---|---|
| Open-source | - | ✅ |
| Self-hosted | - | ✅ |
| Prompt Templating | ✔ | ✅ |
| Agent Tracing | ✔ | ✅ |
| Experiments | ✔ | ✅ |
| Cost Analysis | ✔ | ✅ |
| Evaluation | ✔ | - |
| User Tracking | ✔ | ✅ |
| Feedback Tracking | ✔ | ✅ |
| LangChain Integration | ✔ | ✅ |
| Flexible Pricing | - | ✅ |
| Image support | - | ✅ |
| No payload limitations | - | ✅ |
| Dashboard | ✔ | ✅ |
| Data Export | ✔ | ✅ |

LangSmith currently focuses primarily on text-based LLM applications, with extensive tools for testing, monitoring, and debugging them, while Helicone supports both text and image inputs and outputs.

Why are companies choosing Helicone?

Open-Source & Self-Hosting

Helicone is fully open-source and free to start. Companies can also self-host Helicone within their own infrastructure, which gives them full control over the application and the flexibility to customize it to specific business needs. LangSmith, on the other hand, offers self-hosting only to users on its Enterprise plan.

Cost-Effective

Helicone is also more cost-effective than LangSmith because it uses a volumetric (usage-based) pricing model: companies pay only for what they use, and the first 100k requests each month are free. This makes Helicone an easy and flexible platform for businesses to get started with and scale.
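To make the volumetric model concrete, here is a simplified linear estimate. The 100k monthly free allowance comes from this article; `price_per_request` is a placeholder you would replace with the current rate from Helicone's pricing page, and real plans may be tiered rather than strictly linear.

```python
FREE_REQUESTS_PER_MONTH = 100_000  # free allowance stated in this article


def billable_requests(monthly_requests: int, free_allowance: int = FREE_REQUESTS_PER_MONTH) -> int:
    """Requests that exceed the monthly free allowance."""
    if monthly_requests < 0:
        raise ValueError("monthly_requests must be non-negative")
    return max(0, monthly_requests - free_allowance)


def estimated_monthly_cost(monthly_requests: int, price_per_request: float) -> float:
    """Linear cost estimate; price_per_request is a placeholder, not Helicone's rate."""
    return billable_requests(monthly_requests) * price_per_request


if __name__ == "__main__":
    for volume in (80_000, 250_000, 1_000_000):
        print(volume, billable_requests(volume))
```

A team sending 80,000 requests a month stays entirely within the free allowance, while 250,000 requests leaves 150,000 billable ones.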

Scalable & Reliable

Helicone can also handle a large volume of requests, making it a dependable option for businesses with high traffic. Acting as a gateway, Helicone offers a suite of middleware and advanced features such as caching, prompt threat detection, and a vault for securely sharing API keys.
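Gateway-side caching works by recognizing identical requests and returning a stored response instead of calling the provider again. The toy cache below illustrates that idea under the assumption that "identical" means the same JSON body; it is a generic sketch, not Helicone's implementation (in Helicone, caching is reportedly switched on per request with a `Helicone-Cache-Enabled: true` header; check the docs).

```python
import hashlib
import json
from typing import Any, Callable, Dict


class ResponseCache:
    """Toy in-memory cache keyed on the request body (illustration only)."""

    def __init__(self) -> None:
        self._store: Dict[str, Any] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key_for(body: Dict[str, Any]) -> str:
        # sort_keys makes the key independent of dict key order.
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, body: Dict[str, Any], call: Callable[[Dict[str, Any]], Any]) -> Any:
        """Return a cached response, or call the provider and store the result."""
        key = self.key_for(body)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call(body)
        self._store[key] = response
        return response
```

One design caveat: replaying cached answers only makes sense where repeated identical prompts should yield the same output; with sampling (temperature above zero) a cache deliberately removes that variation.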

Teams that need to respond quickly to market changes or opportunities often use Helicone to reach production quality faster.

Bottom Line

If you need something that “just works” so you can get back to shipping features, Helicone has the core functionality to help you get started instantly.

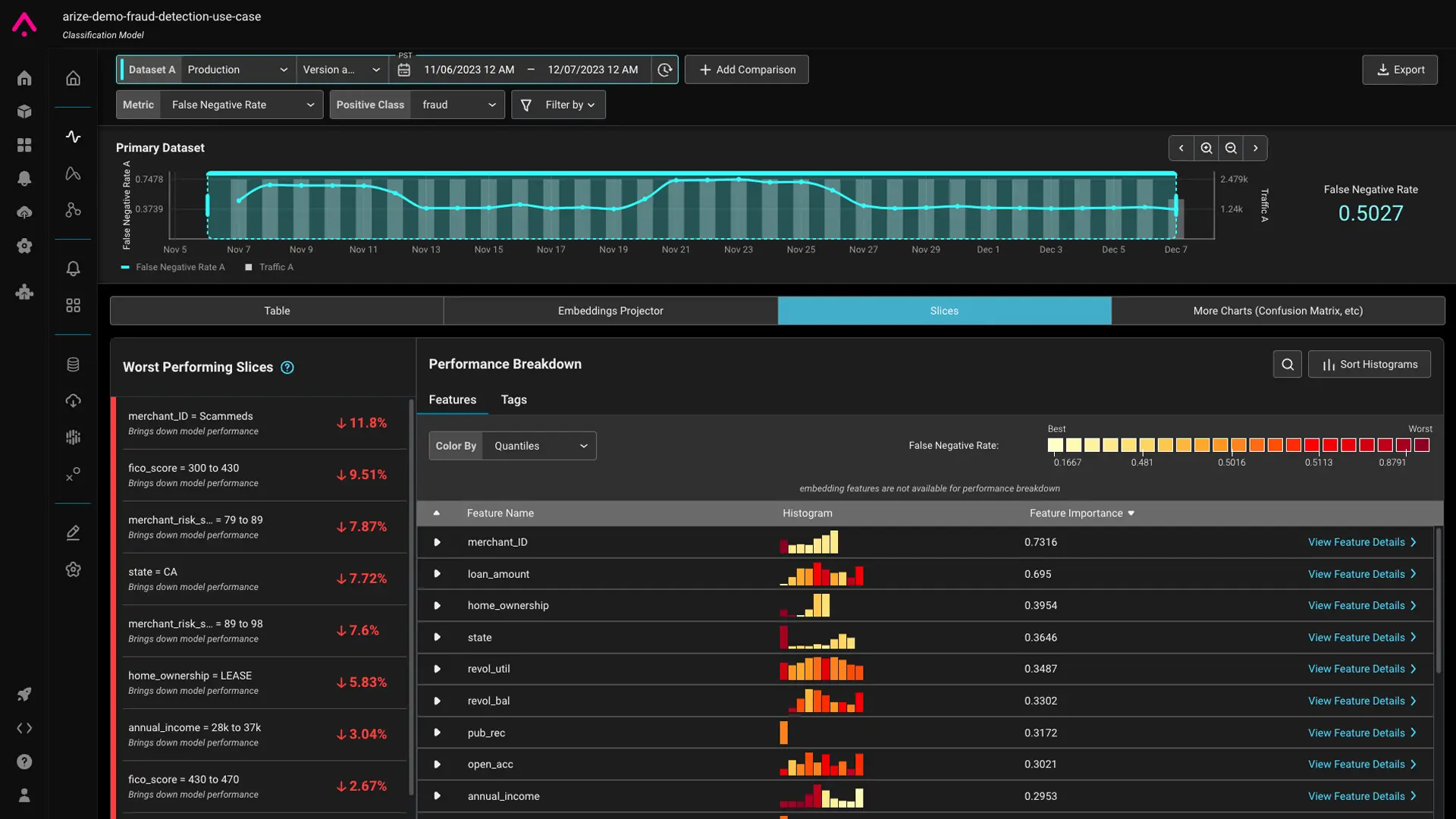

2. Phoenix by Arize AI

Designed for: ML engineers & MLOps teams

What is Phoenix by Arize AI?

Phoenix by Arize AI is known for its strong focus on machine learning model monitoring and explainability. If your company prioritizes understanding model performance in production, detecting model drift, and getting detailed explanations of model predictions, Phoenix may be a better choice than LangSmith.

Top features

- Evaluations - Judge the quality of your LLM outputs on relevance, hallucination %, and latency.

- Traces - Get visibility into the lifecycle of predictions, monitor and analyze performance, and identify the root causes of issues in machine learning models.

- Datasets & Experiments - Understand how a change will affect performance, and test on a specific dataset.
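Whatever tool produces the judgments, an evaluation run boils down to aggregating per-example verdicts into a few rates and latency figures. The sketch below assumes each record already carries relevance and hallucination verdicts (in Phoenix these would come from its evaluators; here they are plain booleans) and uses a nearest-rank percentile; the `EvalRecord` and `summarize` names are hypothetical, not Phoenix's API.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EvalRecord:
    relevant: bool       # verdict from an LLM judge or human reviewer
    hallucinated: bool   # verdict from an LLM judge or human reviewer
    latency_ms: float


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: List[EvalRecord]) -> Dict[str, float]:
    """Aggregate per-example verdicts into the metrics a dashboard would show."""
    n = len(records)
    if n == 0:
        raise ValueError("no records")
    return {
        "relevance_rate": sum(r.relevant for r in records) / n,
        "hallucination_rate": sum(r.hallucinated for r in records) / n,
        "p95_latency_ms": percentile([r.latency_ms for r in records], 95),
    }
```

Comparing these summaries before and after a prompt or model change is the core of the "Datasets & Experiments" workflow described above.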

How does Arize AI compare to LangSmith?

| Feature | LangSmith | Phoenix by Arize AI |
|---|---|---|
| Open-source | - | ✔ |
| Self-hosted | - | - |
| Prompt Templating | ✔ | ✔ |
| Agent Tracing | ✔ | ✔ |
| Experiments | ✔ | ✔ |
| Cost Analysis | ✔ | ✔ |
| Evaluation | ✔ | ✔ |
| User Tracking | ✔ | - |
| Feedback Tracking | ✔ | - |
| LangChain Integration | ✔ | ✔ |
| Flexible Pricing | - | - |
| Image support | - | - |
| No payload limitations | - | - |
| Dashboard | ✔ | ✔ |
| Data Export | ✔ | ✔ |

Why are companies choosing Arize AI?

Machine Learning Observability

Arize AI specializes in real-time monitoring and performance optimization of machine learning models with comprehensive insights into model behavior and data drift.
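Data drift is usually quantified by comparing the distribution of a feature (or a model score) in production against a reference window. The Population Stability Index (PSI) is one widely used metric for this; it is shown here as a generic illustration, not as the metric Arize itself uses. PSI sums $(a_i - e_i)\ln(a_i / e_i)$ over bins, where $e_i$ and $a_i$ are the reference and production shares of bin $i$.

```python
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each [edges[i], edges[i+1]) bin; the last bin is closed."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            last = i == len(edges) - 2
            if edges[i] <= v < edges[i + 1] or (last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI over matching bins; eps keeps empty bins from producing log(0)."""
    if len(expected) != len(actual):
        raise ValueError("bin counts differ")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi
```

A commonly cited rule of thumb treats PSI below about 0.1 as stable and above about 0.25 as a significant shift, though teams typically tune these thresholds per feature.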

Ease of Integration

Arize AI stands out for its support for integration with various machine learning frameworks to help streamline the process of setting up and monitoring models. It also provides visualizations and analytics to help understand model behaviors and impact.

Designed for ML Engineers & MLOps Teams

Arize AI attracts users who need robust ML monitoring, explainability, and scalability, primarily data scientists, ML engineers, and ML ops teams, whereas LangSmith appeals to software engineers, content creators, and researchers who are focused on building and applying language models in different contexts.

Bottom Line

For developers focused on enhancing model performance, Arize AI stands out due to its capabilities in monitoring and analyzing model performance. However, it’s worth noting that Arize AI’s emphasis may not include traditional user feedback tracking, such as gathering user comments or sentiment.

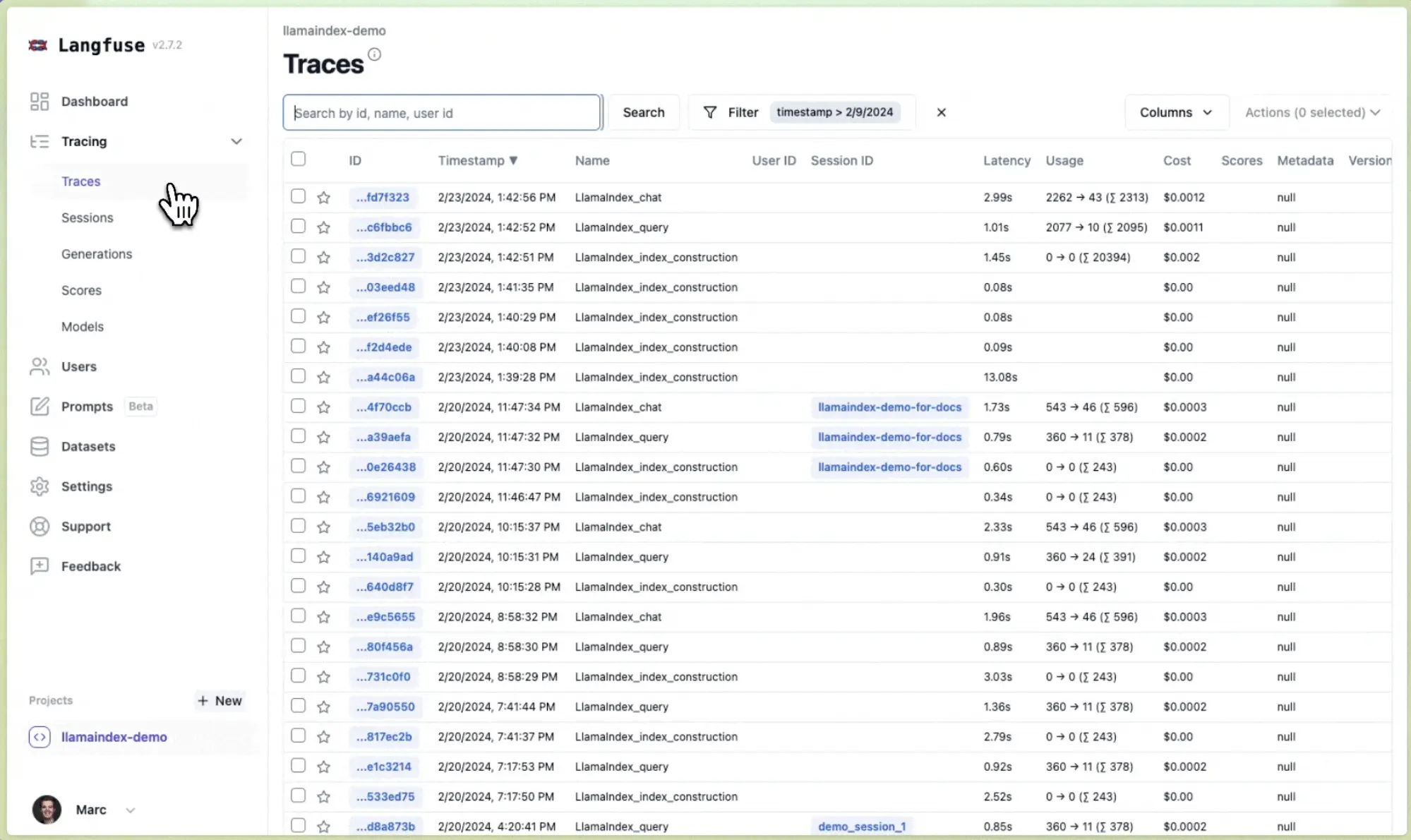

3. Langfuse

Designed for: developers & analysts

What is Langfuse?

Langfuse is an open-source LLM engineering platform that helps teams trace and debug LLM applications. It provides observability, metrics, evals, prompt management, and a playground to debug and improve LLM apps.

Top features

- Tracing - Made for agents and LLM chains. You can trace unlimited nested actions and get a detailed view of the entire request, including non-LLM actions such as database queries and API calls that lead to the response, for full visibility into issues.

- Scoring production traces - Measure quality with user feedback, model-based evaluation, manual labelling, and more.

- Monitoring and logging - Detailed logging to track all interactions with the language model, error tracking for debugging, and usage analytics to optimize deployment.
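Nested tracing rests on one idea: every span remembers which span was active when it started, so a request, its database query, and its LLM call form a tree. The stdlib-only sketch below shows that mechanism with `contextvars`; it is a generic illustration, not Langfuse's SDK (which provides its own decorators and client), and the `span` helper and `RECORDED` list are hypothetical.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class Span:
    name: str
    span_id: str
    parent_id: Optional[str]
    start: float
    end: Optional[float] = None
    metadata: dict = field(default_factory=dict)


# The span active in the current context (works across threads and asyncio tasks).
_current_span = contextvars.ContextVar("current_span", default=None)
RECORDED: List[Span] = []  # finished spans, in the order they close


@contextmanager
def span(name: str, **metadata) -> Iterator[Span]:
    """Open a span whose parent is whichever span is active right now."""
    parent = _current_span.get()
    s = Span(name=name, span_id=uuid.uuid4().hex,
             parent_id=parent.span_id if parent else None,
             start=time.perf_counter(), metadata=metadata)
    token = _current_span.set(s)
    try:
        yield s
    finally:
        s.end = time.perf_counter()
        _current_span.reset(token)  # restore the parent as the active span
        RECORDED.append(s)


if __name__ == "__main__":
    with span("handle_request", user="u1"):
        with span("db_query"):
            pass
        with span("llm_call", model="example-model"):
            pass
    for s in RECORDED:
        print(s.name, "parent:", s.parent_id)
```

Because children close before their parents, the recorded list is in completion order; a backend reconstructs the tree from `parent_id`.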

How does Langfuse compare to LangSmith?

| Feature | LangSmith | Langfuse |
|---|---|---|
| Open-source | - | ✔ |
| Self-hosted | - | ✔ |
| Prompt Templating | ✔ | ✔ |
| Agent Tracing | ✔ | ✔ |
| Experiments | ✔ | ✔ |
| Cost Analysis | ✔ | ✔ |
| Evaluation | ✔ | - |
| User Tracking | ✔ | ✔ |
| Feedback Tracking | ✔ | ✔ |
| LangChain Integration | ✔ | ✔ |
| Flexible Pricing | - | ✔ |
| Image support | - | - |
| No payload limitations | - | - |
| Dashboard | ✔ | ✔ |
| Data Export | ✔ | ✔ |

Why are companies choosing Langfuse?

Open-Source Flexibility

Langfuse is open-source, which means it offers flexibility for customization and adaptation to specific organizational needs without vendor lock-in.

Cost-Effectiveness

Langfuse can be more cost-effective compared to LangSmith, which requires investment in enterprise plans for full feature access and support.

Framework-agnostic tracing capabilities

Langfuse offers comprehensive tracing that is model- and framework-agnostic. It captures the full context of LLM applications, including complex and chained calls, which simplifies debugging and pinpointing issues across long control flows. With LangSmith, by contrast, features such as automated instrumentation for some frameworks may require additional setup or integration effort.

Bottom Line

Langfuse is a good choice for teams looking to improve their LLM applications with a simple and cost-effective tool, but may be limited for larger teams who want a scalable solution or enterprise features.

4. HoneyHive

Designed for: developers & analysts

What is HoneyHive?

HoneyHive AI evaluates, debugs, and monitors production LLM applications. It lets you trace execution flows, customize event feedback, and create evaluation or fine-tuning datasets from production logs.

It is built for teams that want to ship reliable LLM products, with a focus on observability through performance tracking.
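Building evaluation or fine-tuning datasets from production logs typically means filtering logged interactions by quality signals and exporting them in a training-friendly format. The sketch below assumes hypothetical log fields (`prompt`, `completion`, `rating`) and writes a common chat-style JSONL layout with `messages` lists; it is not HoneyHive's export format.

```python
import json
from typing import Iterable, List, Mapping


def logs_to_jsonl(logs: Iterable[Mapping], min_rating: int = 4) -> str:
    """Turn logged interactions into chat-format JSONL lines.

    Keeps only interactions whose user feedback rating is at least min_rating;
    unrated interactions are skipped. Field names are hypothetical.
    """
    lines: List[str] = []
    for log in logs:
        rating = log.get("rating")
        if rating is None or rating < min_rating:
            continue
        example = {
            "messages": [
                {"role": "user", "content": log["prompt"]},
                {"role": "assistant", "content": log["completion"]},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)
```

Filtering on feedback is one choice among several; teams also sample low-rated interactions to build regression test sets for evaluation.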

Top features

- Trace - Log all AI application data to debug execution steps as you iterate.

- Evaluate - Evaluations SDK for flexible offline evaluations across various LLM applications

- Annotate Logs - Involve domain experts to review and annotate logs.

HoneyHive's tracing functionality supports multimodal data, including images, so you can trace functions that handle various types of data, such as image processing steps.
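Tracing functions that handle images raises a practical question: what to log for binary inputs. The toy decorator below records each call's inputs, output, and duration, and replaces raw bytes with their size and a short hash so traces stay small. It is a generic sketch under that assumption, not HoneyHive's SDK; `traced` and `TRACE_LOG` are hypothetical names.

```python
import functools
import hashlib
import time
from typing import Any, Callable, Dict, List

TRACE_LOG: List[Dict[str, Any]] = []


def _summarize(value: Any) -> Any:
    """Replace raw binary payloads (e.g. image bytes) with size and hash."""
    if isinstance(value, (bytes, bytearray)):
        return {"type": "binary", "bytes": len(value),
                "sha256": hashlib.sha256(value).hexdigest()[:16]}
    return value


def traced(func: Callable) -> Callable:
    """Record name, inputs, output, and duration of each successful call.

    Calls that raise are not recorded in this sketch.
    """
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        TRACE_LOG.append({
            "function": func.__name__,
            "args": [_summarize(a) for a in args],
            "kwargs": {k: _summarize(v) for k, v in kwargs.items()},
            "output": _summarize(result),
            "duration_s": time.perf_counter() - start,
        })
        return result
    return wrapper
```

Storing a hash instead of the image keeps the trace lightweight while still letting you match a trace to the original file kept elsewhere.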

How does HoneyHive compare to LangSmith?

| Feature | LangSmith | HoneyHive |
|---|---|---|
| Open-source | - | - |
| Self-hosted | - | - |
| Prompt Templating | ✔ | ✔ |
| Agent Tracing | ✔ | ✔ |
| Experiments | ✔ | ✔ |
| Cost Analysis | ✔ | ✔ |
| Evaluation | ✔ | - |
| User Tracking | ✔ | ✔ |
| Feedback Tracking | ✔ | ✔ |
| LangChain Integration | ✔ | ✔ |
| Flexible Pricing | - | ✔ |
| Image support | - | - |
| No payload limitations | - | - |
| Dashboard | ✔ | ✔ |
| Data Export | ✔ | ✔ |

Bottom Line

HoneyHive provides access to 100+ open-source models in its Playground through integrations, for testing purposes. However, if you want a plug-and-play solution, it's worth looking at other options.

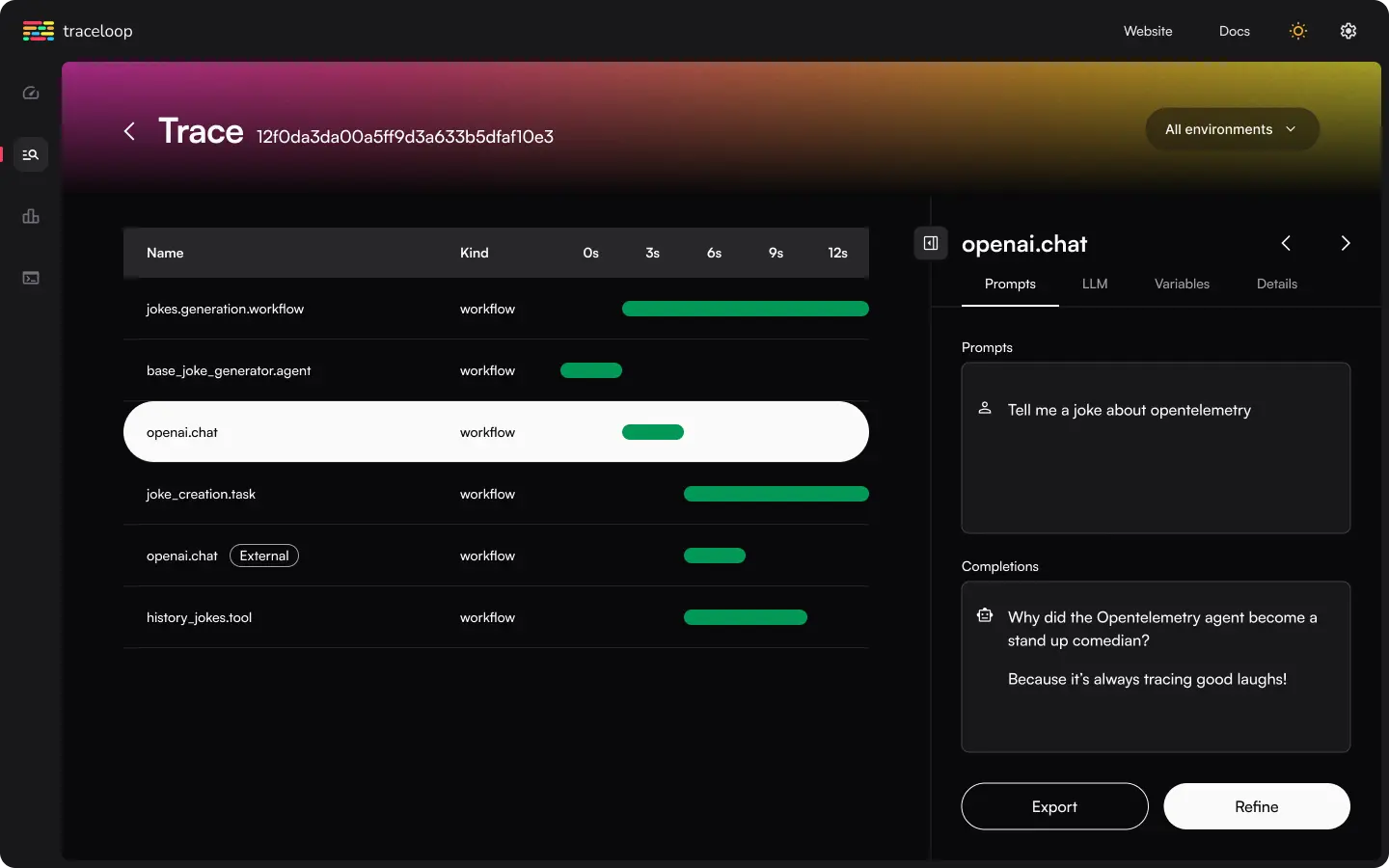

5. OpenLLMetry by Traceloop

Designed for: developers & analysts

What is OpenLLMetry?

OpenLLMetry is an open-source framework developed by Traceloop that simplifies monitoring and debugging large language models. It is built on top of OpenTelemetry, which enables non-intrusive tracing and integration with leading observability platforms and backends such as KloudMate.

OpenLLMetry aims to standardize the collection of mission-critical LLM metrics, spans, traces, and logs through OpenTelemetry.

Top features

- Tracing - Traceloop SDK supports several ways to annotate workflows, tasks, agents and tools in your code to get a more complete picture of your app structure.

- Prompt versioning

- User feedback - Log user feedback on the results of your LLM workflow by calling Traceloop's Python or TypeScript SDK.

How does Traceloop compare to LangSmith?

| Feature | LangSmith | OpenLLMetry by Traceloop |
|---|---|---|
| Open-source | - | ✔ |
| Self-hosted | - | - |
| Prompt Templating | ✔ | ✔ |
| Agent Tracing | ✔ | ✔ |
| Experiments | ✔ | ✔ |
| Cost Analysis | ✔ | - |
| Evaluation | ✔ | ✔ |
| User Tracking | ✔ | ✔ |
| Feedback Tracking | ✔ | ✔ |
| LangChain Integration | ✔ | ✔ |
| Flexible Pricing | - | ✔ |
| Image support | - | - |
| No payload limitations | - | - |
| Dashboard | ✔ | ✔ |
| Data Export | ✔ | - |

Bottom Line

Traceloop focuses on evaluation, and its pricing reflects that: costs can grow quickly as your application scales and logs more traces.

Frequently Asked Questions

Q: Which LangSmith alternative is best for open-source and self-hosting?

Helicone and Langfuse are both open-source and offer self-hosting options, giving you full control over your data and infrastructure.

Q: How do these tools handle data privacy and security?

- Helicone: Being open-source and self-hostable, Helicone allows you to keep all data within your infrastructure, enhancing security.

- Langfuse: Also open-source and self-hostable, offering similar security advantages.

- Phoenix by Arize AI: Offers enterprise-grade security features but is not self-hostable.

- HoneyHive: Does not offer self-hosting; review their privacy policies for data handling practices.

- OpenLLMetry by Traceloop: Open-source but not self-hosted; integration with observability platforms may affect data control.

Q: Are there free options available among these tools?

- Helicone: Offers a free tier with up to 100,000 requests per month.

- Langfuse: Free to start with flexible pricing options.

- Phoenix by Arize AI: May offer free trials; pricing details should be confirmed on their website.

- HoneyHive: Flexible pricing with possible free tiers.

- OpenLLMetry by Traceloop: Open-source but may incur costs as you scale.

Q: Do these tools support LangChain integration?

Yes, all the mentioned tools offer integration with LangChain, facilitating seamless incorporation into your existing workflows.

Q: Are there limitations on payload sizes or types?

Helicone stands out by supporting both text and image inputs and outputs without payload limitations. Other tools may have restrictions, so it’s important to review their documentation for specifics.

Q: Which tool is best for ML engineers and MLOps teams?

Phoenix by Arize AI is specifically designed for ML engineers and MLOps teams, focusing on model monitoring and explainability.